AI ignites demand for computing power, and the PCB industry enters a period of market growth.

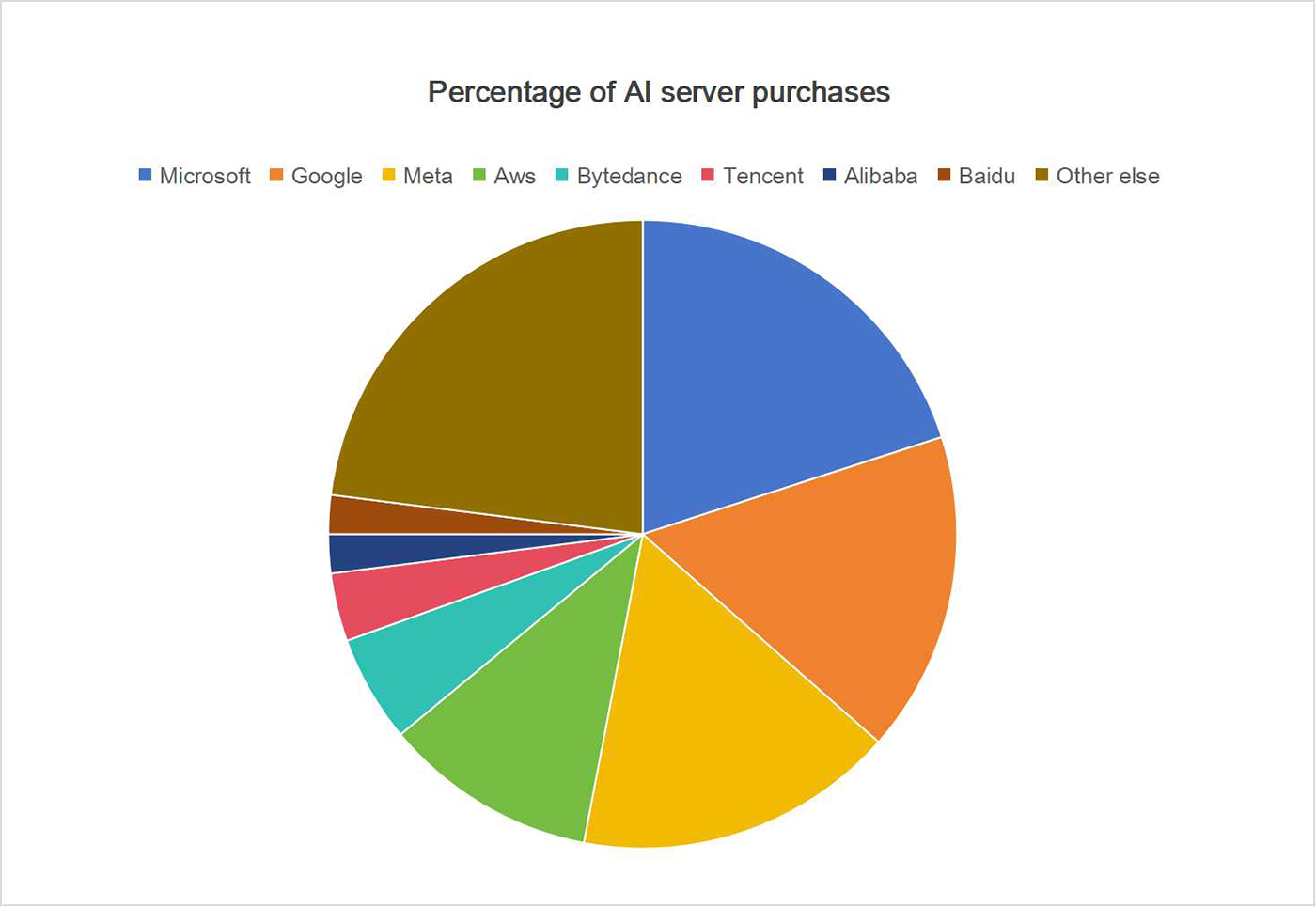

●Domestic large models are being released one after another, and large AI models are driving demand for computing power: Baidu released its large AI model "Wen Xin Yi Yan" (ERNIE Bot), Alibaba Cloud officially announced that its large AI model "Tongyi Qianwen" had started invitation-only testing, Alibaba Damo Academy released the "Tongyi" large model series, and the chairman of SenseTime Technology announced the launch of the company's "Daily New" (SenseNova) large model system. This wave of domestic releases points to a further increase in demand for computing power. At its GTC developer conference, NVIDIA released a number of AI products, including the new H100 GPU designed for large language models (LLMs), the L4 GPU for AI video generation, and the DGX Cloud AI supercomputing service. NVIDIA declared that the "iPhone moment" of AI has arrived and that generative AI will reshape almost every industry, and said it will invest fully in AI technology and launch new services and hardware to power AI products.

●The value of AI server PCBs has increased significantly: AI servers place higher requirements on chip performance and transmission rates, and usually require high-end GPUs and newer PCIe standards. Server platform upgrades in turn raise the requirements for PCB layer count, materials, and so on. At present, mainstream server PCBs have 8-16 layers: generally 8-12 layers for PCIe 3.0, 12-16 layers for PCIe 4.0, and more than 16 layers for the PCIe 5.0 platform. In terms of materials, after the PCIe upgrade the copper-clad laminate (CCL) used in servers must reach high-frequency, ultra-low-loss or extremely-low-loss grades. According to industry research, the PCB value of the Purley platform, which supports PCIe 3.0, is about 2,200-2,400 yuan; the PCB value of the Whitley platform, which supports PCIe 4.0, is 30%-40% higher; and the PCB value of the Eagle platform, which supports PCIe 5.0, is roughly double that of Purley. Also according to industry research, general-purpose server PCBs mainly use 8-10 layer M6 boards, training server PCBs use 18-20 layer M8 boards, and inference server PCBs use 14-16 layer M6 boards. The PCBs in an AI server are worth 10,000-15,000 yuan, a significant increase in value.

●Chinese manufacturers continue to increase investment in FC-BGA packaging substrates to support breakthroughs in domestic AI chips: FC-BGA packaging substrates are mainly used for CPUs, GPUs and other computing chips, and the market is essentially monopolized by foreign manufacturers. To change this situation, domestic manufacturers are gradually promoting local substitution, and PCB manufacturers such as Shennan Circuit, Zhuhai Yueya, and Xingsen Technology are gradually expanding production.

1. Computing power drives demand for AI servers, and the PCB industry welcomes development opportunities.

1.1. AI servers are more suitable for large model application scenarios.

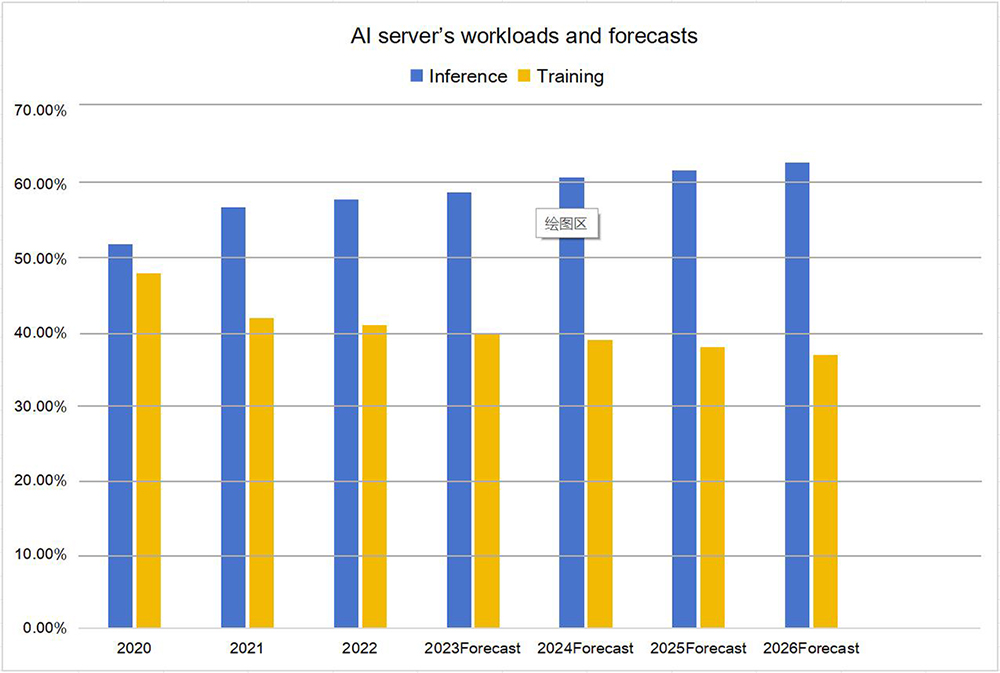

●Large model applications involve two main scenarios: "training" and "inference". According to IDC data, 42.4% of China's artificial intelligence server workloads in 2021 were used for model training and 57.6% for inference. The share of inference is expected to rise to 62.2% by 2026.

●The training phase mainly adjusts the parameters of the AI model based on existing data. Through iterative computation, the model learns to handle complex problems such as image recognition, speech recognition, and natural language processing, and its accuracy improves until it meets expectations. According to NVIDIA data, training a large language model with 1 trillion parameters takes one month on 4,096 A100 GPUs and one week on 4,096 H100 GPUs. Tianyi Think Tank, citing OpenAI, explains that the computing power required in the training phase equals 6 times the product of the number of model parameters and the size of the training data set.

●The inference phase follows the training phase: the trained model performs inference on input data, and the computation is less iteration-intensive than in the "training" phase.

OpenAI states that the computing power requirement of the ChatGPT3 inference phase is twice the product of the number of model parameters and the size of the training data set.
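The two rules of thumb above (6 times and 2 times the product of parameter count and data size) can be sketched in Python. This is a minimal illustration, not a sizing tool: the function names are hypothetical, the interpretation of data size as a token count and of the result as floating-point operations (FLOPs) is an assumption, and the example model size (175 billion parameters, 300 billion tokens) is an illustrative input that does not come from the report.

```python
def training_compute(n_params: float, data_size: float) -> float:
    """Training rule of thumb from the text: 6 x parameters x data size."""
    return 6.0 * n_params * data_size


def inference_compute(n_params: float, data_size: float) -> float:
    """Inference rule of thumb from the text: 2 x parameters x data size."""
    return 2.0 * n_params * data_size


# Illustrative inputs only (assumptions, not figures from the report).
example_params = 175e9   # 175 billion parameters
example_tokens = 300e9   # 300 billion tokens

train_flops = training_compute(example_params, example_tokens)
infer_flops = inference_compute(example_params, example_tokens)

print(f"training : {train_flops:.3e}")
print(f"inference: {infer_flops:.3e}")
print(f"training / inference ratio: {train_flops / infer_flops:.1f}")
```

Whatever inputs are chosen, the two rules differ by a constant factor of three for the same parameter count and data size, which is one way to read the statement that inference is less compute-intensive than training.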

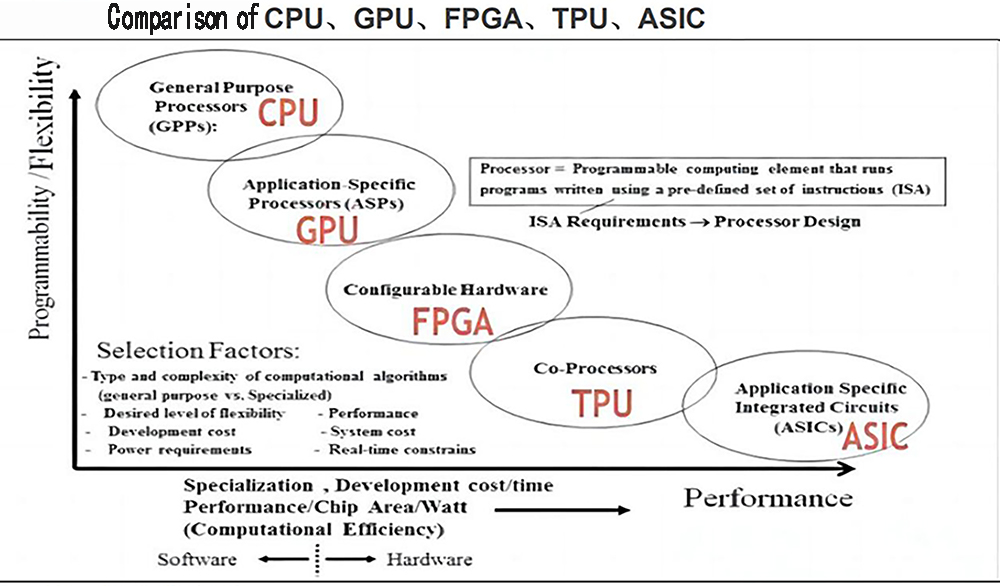

●AI servers are equipped with higher-performance computing cores and larger memory capacity, making them better suited to the computing needs of large models. In terms of hardware composition, AI servers are heterogeneous servers, mainly in the forms CPU+GPU, CPU+FPGA, and CPU+ASIC. The advantage is that the structure of the computing modules can be adjusted flexibly according to actual needs. According to IDC data, GPUs accounted for 89% of the market share in China's AI market in 2022, and CPU+GPU is the most popular configuration. A GPU has a large number of computing units and a long pipeline; it excels at graphics rendering and can effectively meet the large-scale parallel computing needs of AI. In a GPU+CPU system, the GPU and CPU share data with high efficiency, tasks are scheduled to the appropriate processor with very low overhead, and no memory copy or cache refresh is required. The CPU contains multiple cores optimized for serial processing, while the GPU contains a large number of small cores that run programs in parallel, which is the basis of its high computing power. Combining the two lets each play to its strengths. AI servers can therefore effectively meet the computing requirements of deep learning and neural networks: their GPUs efficiently support matrix operations such as convolution, pooling, and activation functions, which greatly improves the computing efficiency of deep learning algorithms and raises overall computing power.

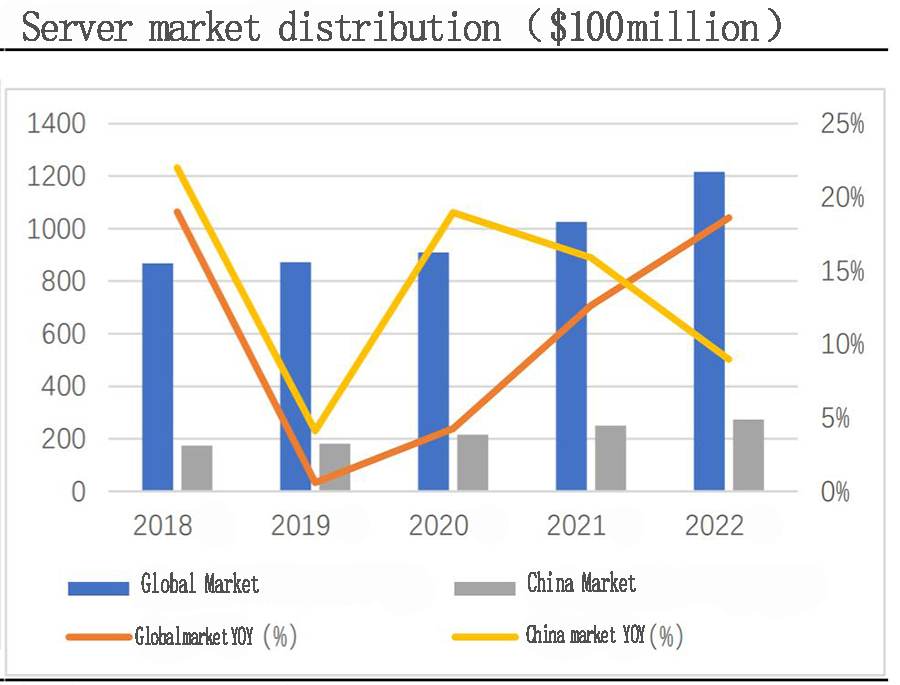

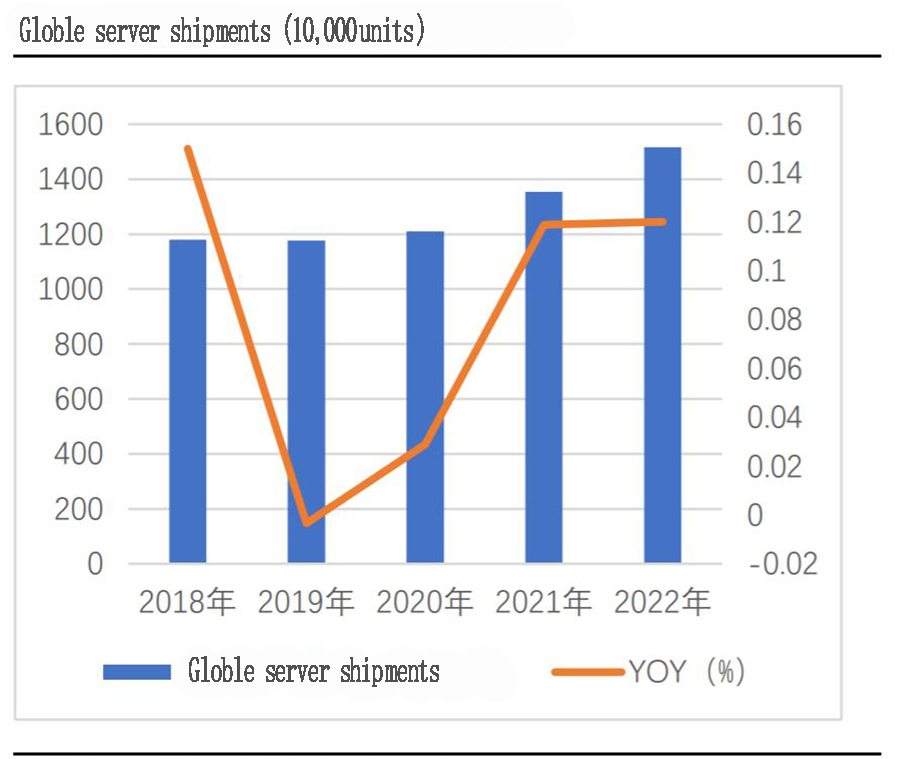

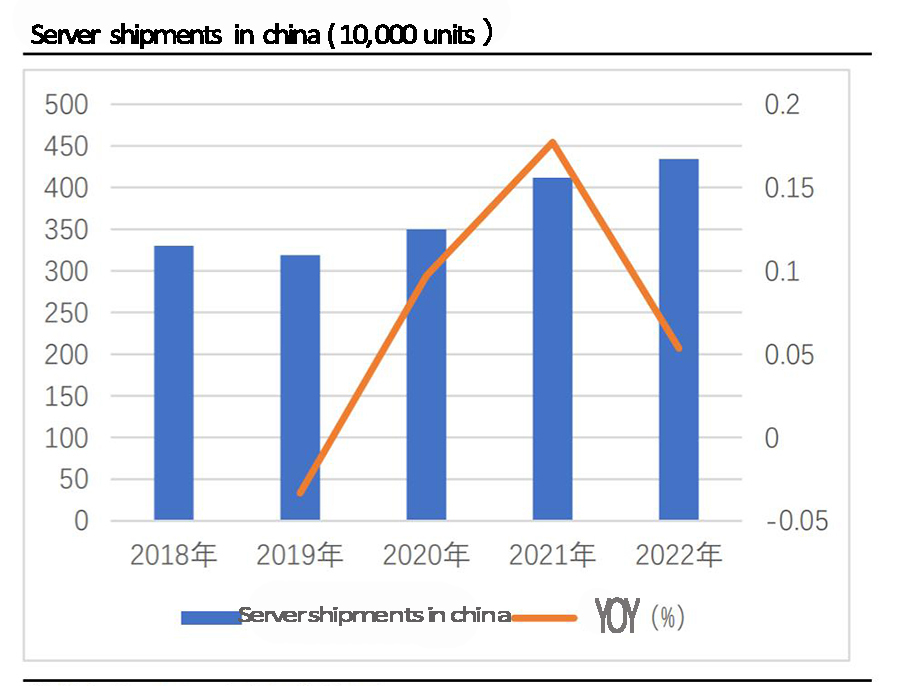

●According to IDC data, the global AI server market is expected to reach US$31.79 billion in 2025. China's server shipments in 2022 were 4.341 million units. According to a forecast by China Business Intelligence Network, China's server shipments are expected to reach 4.49 million units in 2023.

●The development of AI servers will increase the value of PCBs. AI servers place higher requirements on chip performance and transmission rates, and usually require high-end GPUs and newer PCIe standards. Server platform upgrades in turn raise the requirements for PCB layer count, materials, and so on. At present, mainstream server PCBs have 8-16 layers: generally 8-12 layers for PCIe 3.0, 12-16 layers for PCIe 4.0, and more than 16 layers for the PCIe 5.0 platform. In terms of materials, after the PCIe upgrade the copper-clad laminate (CCL) used in servers must reach high-frequency, ultra-low-loss or extremely-low-loss grades. According to industry research, the PCB value of the Purley platform, which supports PCIe 3.0, is about 2,200-2,400 yuan; the PCB value of the Whitley platform, which supports PCIe 4.0, is 30%-40% higher; and the PCB value of the Eagle platform, which supports PCIe 5.0, is roughly double that of Purley. Also according to industry research, general-purpose server PCBs mainly use 8-10 layer M6 boards, training server PCBs use 18-20 layer M8 boards, and inference server PCBs use 14-16 layer M6 boards. The PCBs in an AI server are worth 10,000-15,000 yuan.
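The platform-by-platform value comparison can be made concrete with a little arithmetic. The Python sketch below uses only the figures quoted above. Two choices are assumptions made for illustration: the Eagle platform is treated as exactly 2x Purley, and the 30% uplift is applied to the low end of the Purley range and the 40% uplift to the high end. All variable and function names are hypothetical.

```python
# Figures quoted in the text, in yuan.
PURLEY_RANGE = (2200, 2400)         # PCIe 3.0 platform
WHITLEY_UPLIFT = (0.30, 0.40)       # PCIe 4.0: 30%-40% above Purley
EAGLE_MULTIPLIER = 2.0              # PCIe 5.0: assumed to be exactly 2x Purley
AI_SERVER_RANGE = (10_000, 15_000)  # AI server PCB value


def scale(value_range, low_factor, high_factor):
    """Scale the low and high ends of a range, rounded to whole yuan."""
    low, high = value_range
    return (round(low * low_factor), round(high * high_factor))


whitley_range = scale(PURLEY_RANGE, 1 + WHITLEY_UPLIFT[0], 1 + WHITLEY_UPLIFT[1])
eagle_range = scale(PURLEY_RANGE, EAGLE_MULTIPLIER, EAGLE_MULTIPLIER)

# Smallest and largest ratio of AI-server PCB value to Purley PCB value.
min_ratio = AI_SERVER_RANGE[0] / PURLEY_RANGE[1]
max_ratio = AI_SERVER_RANGE[1] / PURLEY_RANGE[0]

print("Whitley:", whitley_range)
print("Eagle  :", eagle_range)
print(f"AI server vs Purley: {min_ratio:.1f}x to {max_ratio:.1f}x")
```

Under these assumptions, Whitley works out to 2,860-3,360 yuan and Eagle to 4,400-4,800 yuan, while the AI server figure is roughly 4 to 7 times the Purley value.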

1.2. The localization process of IC carrier boards is accelerating, helping domestic AI chip breakthroughs.

●The IC packaging substrate is one of the key raw materials in integrated circuit packaging. Also known as an IC carrier board, it provides electrical interconnection, protection, support, heat dissipation, and assembly functions for the chip. It serves as a bridge between the bare die and the PCB, connecting high-density semiconductor chips to the motherboard to transmit electrical signals and power.

●Chinese manufacturers are gradually making breakthroughs in FC-BGA packaging substrates to support the development of domestic AI chips. To change the situation in which FC-BGA packaging substrates are monopolized by foreign suppliers and heavily dependent on imports, domestic manufacturers are gradually promoting local substitution. Chinese PCB manufacturers such as Shennan Circuit, Zhuhai Yueya, and Xingsen Technology are gradually expanding production.

●The revenue of representative companies in the localization of FC-BGA packaging substrates is as follows:

*Hushi Electric Co., Ltd.: according to Wind data, operating income was 8.336 billion yuan in 2022, compared with 7.418 billion yuan in the previous year, a year-on-year increase of 12.37%.

*Shennan Circuit: according to Wind data, operating income was 13.992 billion yuan in 2022, a year-on-year increase of 0.36%.

*Xingsen Technology: according to Wind data, operating income was 5.354 billion yuan in 2022, a year-on-year increase of 6.23%.

*Shenghong Technology: according to its 2022 annual report, the company invested a total of 287 million yuan in R&D, and operating income was 7.885 billion yuan, a year-on-year increase of 6.10%.
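The year-on-year figures above follow from the usual growth formula, and the same formula can be inverted to estimate the previous-year revenue where only the growth rate is given. The Python sketch below is a rough consistency check on the rounded figures quoted in the list. The function names are hypothetical, and the implied prior-year values are estimates derived from rounded inputs, not reported numbers.

```python
def yoy_growth_pct(current: float, previous: float) -> float:
    """Year-on-year growth in percent."""
    return (current - previous) / previous * 100.0


def implied_previous(current: float, growth_pct: float) -> float:
    """Previous-year value implied by a current value and a growth rate."""
    return current / (1.0 + growth_pct / 100.0)


# Hushi Electric: both years are quoted (billion yuan).
hushi_growth = yoy_growth_pct(8.336, 7.418)
print(f"Hushi Electric growth: about {hushi_growth:.1f}%")

# Other companies: only 2022 revenue and growth are quoted.
reported = {
    "Shennan Circuit": (13.992, 0.36),
    "Xingsen Technology": (5.354, 6.23),
    "Shenghong Technology": (7.885, 6.10),
}
for name, (revenue_2022, growth_pct) in reported.items():
    estimate = implied_previous(revenue_2022, growth_pct)
    print(f"{name}: implied 2021 revenue of about {estimate:.2f} billion yuan")
```

For Hushi Electric the recomputed growth agrees with the reported 12.37% to within rounding of the revenue figures.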

●This report is reproduced from Essence Securities. The copyright belongs to Essence Securities Co., Ltd.
